Apache Iceberg is an open table format designed to bring database-like reliability and consistency to data lakes. Originally developed at Netflix, Iceberg introduces a metadata abstraction layer on top of raw data files (Parquet, ORC, Avro), enabling versioned and transactional access at scale.

In this post, we’ll explore how Iceberg compares to traditional S3 Parquet data lakes, how it sits on S3, its key features, and how it fits into modern AWS architectures. We are going to walk you through on key features that Apache Iceberg enables and how to utilize these features in Amazon Athena on a high level.

What is Apache Iceberg

At its core, Apache Iceberg is a table format that manages large analytic datasets on object storage like S3. Unlike traditional Parquet-only setups, Iceberg separates data storage from metadata management, introducing a snapshot-based layer that tracks files, partitions, and table state.

In traditional S3 + Parquet solution the data is stored directly to S3, usually organized by partition folders and AWS Glue catalog stores the table schemas and partition information. Iceberg too stores the data in Parquet format in S3, but metadata and snapshots also live in S3. Glue acts as a catalog for Iceberg tables enabling Athena to query them.

Key Features of Apache Iceberg

Iceberg introduces several capabilities that traditional S3 + Parquet lakes cannot offer natively:

1. ACID Transactions

Traditional Parquet files have no transactional support. Iceberg’s snapshot mechanism ensures atomic commits, isolated reads, and consistent tables even with concurrent writes.

2. Schema Evolution

With Parquet-only lakes, schema changes are cumbersome. Iceberg tracks schema versions:

- Add, rename, or drop columns safely.

- Queries against old snapshots continue to work seamlessly.

3. Hidden Partitioning

Partitioning in classic S3 lakes requires exposing column values in folder structures, leading to:

- Manual overhead.

- Difficulties handling evolving data.

Iceberg abstracts this via partition spec metadata, allowing queries to optimize scans automatically.

4. Time Travel

Traditional lakes cannot query historical data without manual backups. Iceberg’s snapshot system allows:

- Querying past table states.

- Auditing and rollback.

- Reproducing historical reports.

5. Efficient Updates and Deletes

Parquet-based lakes require rewriting entire partitions to update or delete records. Iceberg supports incremental writes, upserts, and deletes, minimizing S3 read/write overhead.

6. Performance Optimization

Iceberg maintains manifest lists and metadata trees, reducing expensive S3 file listings for queries. It can compact small files automatically, improving Athena and Spark performance.

Considerations

Using Apache Iceberg does have a couple of things to consider. The main drawback of the format is the added operational overhead. The additional metadata that makes Iceberg work needs some maintenance to keep working smoothly. The three big maintenance operations are snapshot expiration, old metadata file removal and deleting orphan files. Additionally, some tables with frequent small write operations should have their small data files compacted into larger files regularly.

Working with Apache Iceberg in Athena

To demonstrate the key features of Apache Iceberg on AWS, we’ll be using Amazon Athena. We’ve created an Iceberg table in S3 by running the following SQL in Athena:

CREATE TABLE orders (

id string,

price bigint,

product string,

customer string,

ts timestamp)

PARTITIONED BY (day(ts))

LOCATION 's3://bucket-name/example/'

TBLPROPERTIES (

'table_type' ='ICEBERG'

)

Next, we will add some rows to our table. For demonstration purposes, we do this with two separate SQL statements:

INSERT INTO orders VALUES

('ORD-001', 29.99, 'Laptop Stand', 'Alice Johnson', TIMESTAMP '2024-03-15 09:23:41'),

('ORD-002', 149.50, 'Wireless Keyboard', 'Bob Smith', TIMESTAMP '2024-03-15 10:15:22'),

('ORD-003', 79.99, 'USB-C Hub', 'Carol Davis', TIMESTAMP '2024-03-15 11:47:33'),

('ORD-004', 199.99, 'Monitor', 'David Wilson', TIMESTAMP '2024-03-15 14:30:15'),

('ORD-005', 45.00, 'Mouse Pad', 'Emma Brown', TIMESTAMP '2024-03-15 16:22:50');

INSERT INTO orders VALUES

('ORD-006', 89.99, 'Webcam', 'Frank Miller', TIMESTAMP '2024-03-16 08:45:12'),

('ORD-007', 129.99, 'Headphones', 'Grace Lee', TIMESTAMP '2024-03-16 10:33:28'),

('ORD-008', 34.50, 'Cable Organizer', 'Henry Taylor', TIMESTAMP '2024-03-16 12:18:45'),

('ORD-009', 249.99, 'Desk Lamp', 'Iris Martinez', TIMESTAMP '2024-03-16 15:05:33'),

('ORD-010', 59.99, 'Ergonomic Mouse', 'Jack Anderson', TIMESTAMP '2024-03-16 17:42:19');

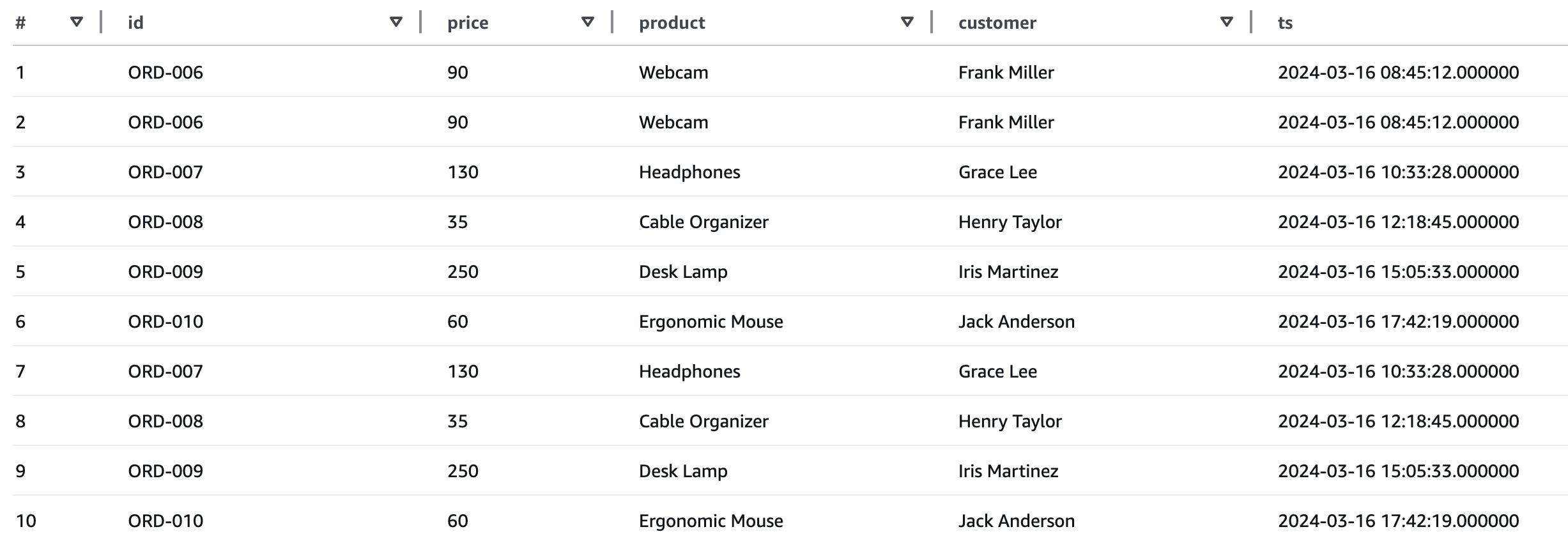

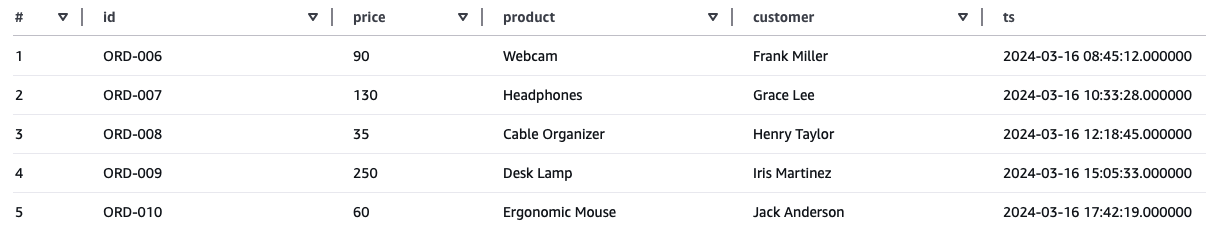

After running these commands, we have a table that looks like this:

Initial state of our Iceberg table

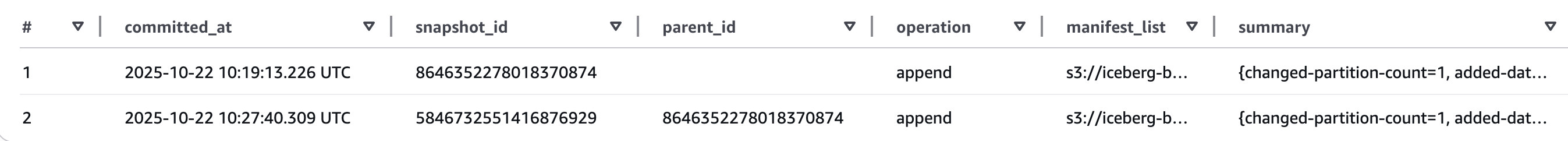

Here’s a good point to stop and look at a core feature of Apache Iceberg: snapshots. Since we inserted our initial rows with two SQL statements, there are two snapshots in the Iceberg table’s history. We can query an Iceberg table’s metadata to learn more:

SELECT * FROM "orders$snapshots"

Notice the special $ sign that indicates what metadata we want to query. Other options include $history, $partitions, and $files.

Snapshots from our Iceberg table

We can use the snapshots for some of Iceberg’s special features we talked about earlier in our blog post, like time traveling and rollbacks. More on this in a little bit, but first let’s look at another Iceberg feature: schema evolution. In a traditional database or data lake, changing the schema of the data would require a lot of implementation work. However, when using an Iceberg data lake, schema changes can be done with a single SQL statement:

-- Add a new column to the table

ALTER TABLE orders ADD COLUMNS (order_status string);

-- Insert rows with the new column

INSERT INTO orders VALUES

('ORD-011', 159.99, 'Mechanical Keyboard', 'Karen White', TIMESTAMP '2024-03-17 09:12:30', 'shipped'),

('ORD-012', 399.99, '4K Monitor', 'Luke Harris', TIMESTAMP '2024-03-17 11:25:45', 'processing'),

('ORD-013', 75.50, 'Mouse', 'Mary Clark', TIMESTAMP '2024-03-17 13:40:18', 'delivered'),

('ORD-014', 189.99, 'Standing Desk Converter', 'Nathan King', TIMESTAMP '2024-03-18 08:33:22', 'shipped'),

('ORD-015', 49.99, 'Blue Light Glasses', 'Olivia Scott', TIMESTAMP '2024-03-18 14:55:10', 'pending');

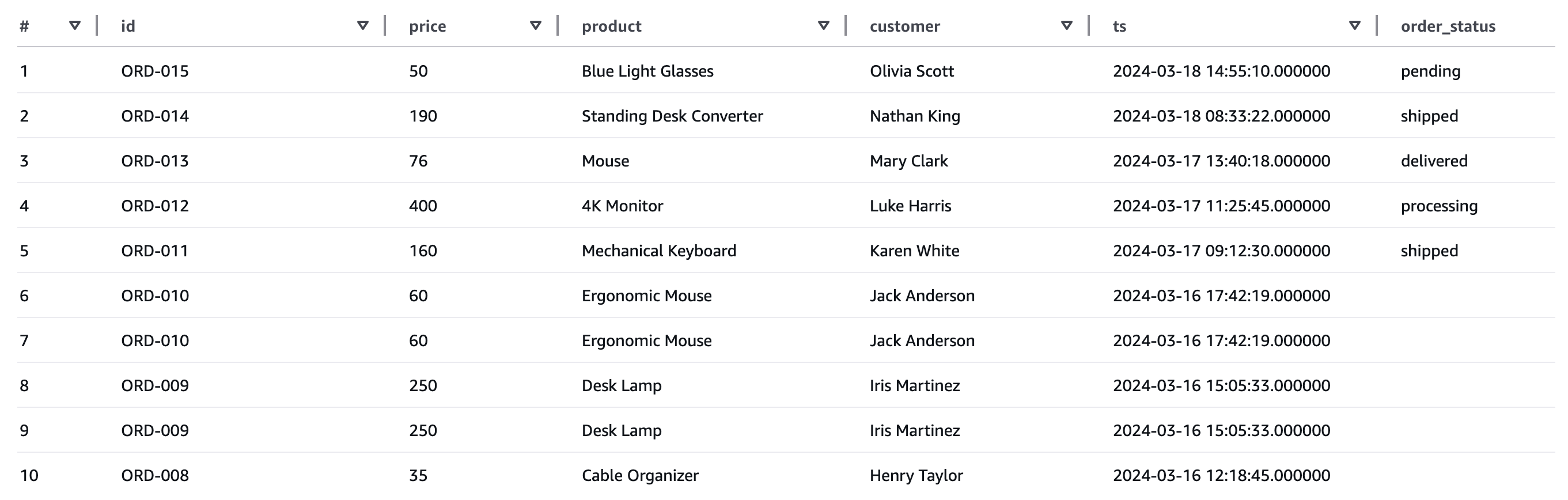

Let’s take a look at our table’s contents after editing the schema:

SELECT * FROM orders ORDER BY ts DESC LIMIT 10;

Results after schema modification

We are able to successfully query all rows, even the ones from before that did not have the order_status column when initially inserting. The rows that existed before adding the order_status simply have null values for the new column.

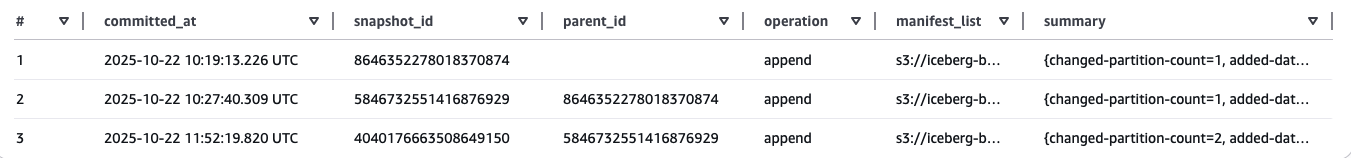

Now that we have made several actions to our table, let’s circle back and take another look at snapshots. We should now have three of them, one for each time we added data to our table.

Snapshots from our Iceberg table

Let’s use an amazing feature of Iceberg that the snapshots enable: time traveling. We can query the state of the table at different points in time by adding one simple clause to an SQL query. The following query will select all rows up until the specified timestamp:

- Note: the timestamp is the time when the snapshot was created, not to be confused with the

tscolumn in our example data

SELECT * FROM orders

FOR TIMESTAMP AS OF TIMESTAMP '2025-10-22 10:19:13.226 UTC';

Results from the time travel query

The snapshot we queried contains only the first 5 rows, since we specified the timestamp right after the first insert SQL. Let’s say we had made a huge mistake in an insert or we updated rows without a WHERE clause. When working with an Iceberg data lake, we could simply rollback the table to the state it was before the mistake by using the snapshots. This is a very powerful feature and allows us to recover from mistakes much more easily.

S3 Tables

A relatively new service called Amazon S3 Tables offers S3 storage that is optimized for Apache Iceberg. The data is stored in a different bucket type called a table bucket. Inside the table bucket, there are tables which store data in Iceberg format and they can be queried with query engines such as Amazon Athena, Amazon Redshift and Apache Spark.

Purpose-built for Iceberg

Table buckets are built for analytical data stored in Apache Iceberg format. They offer the same durability, availability, and scalability we’ve come to expect from Amazon S3, but they also provide higher transactions per second and better query throughput.

Automated maintenance and optimization

As mentioned earlier, Iceberg does require some additional overhead as the amount of data and transactions stack up in your data lake. With table buckets, S3 automatically handles maintenance operations, such as file compaction, snapshot management, and orphaned file removal. These operations improve the table’s performance and also reduce storage costs. The automated maintenance can be customized per table and significantly reduces manual overhead.

Conclusion

Apache Iceberg transforms traditional data lakes by bringing essential features like ACID transactions, schema evolution, time travel, and efficient updates that Parquet files alone cannot provide. Its hidden partitioning and performance optimizations make queries faster and more reliable, while its snapshot-based architecture enables powerful capabilities like rollbacks and historical data analysis.

While Iceberg does introduce additional operational overhead through metadata maintenance, snapshot expiration, and file compaction requirements, services like Amazon S3 Tables significantly reduce this burden through automated optimization. For organizations building data lakes on AWS, Apache Iceberg is an incredibly powerful tool that brings database-like reliability and flexibility to S3-based analytics workloads.